Hola! It has been a while since we dove into technical posts. We have been very busy building several deep-learning projects in the mortgage and call center industries, ranging from analyzing bank statements to analyzing human voices.

One of our recent projects called for running inference on AWS SageMaker’s Serverless Inference infrastructure. We had a custom-tuned HuggingFace model that takes a text prompt and an image: the prompt is a question, and the image is what the question is about. The AWS documentation can be quite overwhelming, and to add to the difficulty, the input is not just a piece of text but text plus an image. Furthermore, to simplify the inference, we used a Pipeline.

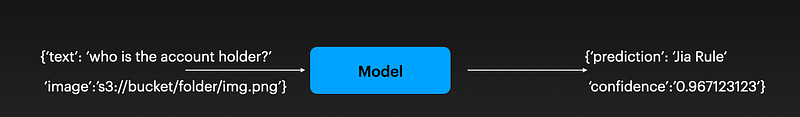

Here is the basic idea:

So we need to translate the input arriving at the endpoint into something we can feed into the model. Fortunately, we can leverage a parameter of HuggingFaceModel called model_data to achieve this. As the documentation states:

model_data (str or PipelineVariable): The Amazon S3 location of a SageMaker model data .tar.gz file.

Before we jump into that rabbit hole, let’s talk about the basic idea.

There are two parts:

- We need to zip our custom model along with the inference script into a .tar.gz file

- We create a HuggingFaceModel built upon the .tar.gz file on SageMaker, then deploy the model to an endpoint with a configuration that specifies it as serverless

I am drastically simplifying the process, as I haven’t talked about creating the Lambda function or setting up the roles and permissions needed to call the endpoint. I am sure you will figure those out, since the most confusing part is setting up the model and the endpoint. We will touch upon some of the finer details as we move on.

First, let’s talk about creating the model file.

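The .tar.gz file needs to contain all the model files HuggingFace would use, such as the .bin weights file and the .json config files. Let’s assume that all the necessary files are saved to “./model”. The next step is to create an inference script called “inference.py” alongside these model files. Note that the Hugging Face documentation places this script in a code/ subfolder of the archive (code/inference.py), so check that layout if your custom handlers are not picked up. Here is a sample; I will go over the functions shortly: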

```python
import json

from transformers import AutoModel, AutoTokenizer, pipeline


def model_fn(model_dir):
    tokenizer = AutoTokenizer.from_pretrained(model_dir)
    model = AutoModel.from_pretrained(model_dir)
    p = pipeline("TASK NAME", model=model, tokenizer=tokenizer)
    return p


def input_fn(request_body, request_content_type):
    if request_content_type == 'application/json':
        request_body = json.loads(request_body)
        if not bool(request_body.get('text')) or not bool(request_body.get('image')):
            raise ValueError("Missing text or image from the input")
        return request_body
    else:
        raise ValueError("This model only supports application/json input")


def predict_fn(input_data, model):
    return model(input_data['text'], input_data['image'])


def output_fn(prediction, content_type):
    resp_json = {'Output': prediction}
    return resp_json
```

The schema of this inference file can be found here.

Per the documentation:

- model_fn(model_dir) overrides the default method for loading a model. The return value model will be used in predict for predictions. It receives the model_dir argument, the path to your unzipped model.tar.gz.

- transform_fn(model, data, content_type, accept_type) overrides the default transform function with your custom implementation. You will need to implement your own preprocess, predict and postprocess steps in the transform_fn. This method can’t be combined with input_fn, predict_fn or output_fn mentioned below.

- input_fn(input_data, content_type) overrides the default method for preprocessing. The return value data will be used in predict for predictions. The inputs are: input_data, the raw body of your request, and content_type, the content type from the request header.

- predict_fn(processed_data, model) overrides the default method for predictions. The return value predictions will be used in postprocess. The input is processed_data, the result from preprocess.

- output_fn(prediction, accept) overrides the default method for postprocessing. The return value result will be the response of your request (e.g. JSON). The inputs are: predictions, the result from predict, and accept, the return accept type from the HTTP request, e.g. application/json.

Before you do anything else, I highly recommend a test run in your local environment to ensure all these functions run correctly. I also encourage you to add more debug logs so you can see whether the model is functioning correctly. CloudWatch will give you logs, but they are packed with so much output that it is hard to figure out quickly what is going on.

The basic process of running these functions is quite simple:

- the model is loaded with model_fn when inference is triggered via the endpoint

- the model, in our case the pipeline, is loaded and returned

- next, the input is passed to input_fn. In our example, we do a simple check and return the dict object

- the dict object and the loaded pipeline are then passed to predict_fn to produce the inference. You can customize this function if you have a more complex inference procedure

- finally, the inference result is passed to output_fn to massage the data into a specific format. Like all the other functions, you can change it to reflect your needs, such as adding tokens, attributes, etc.
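The flow above can be exercised locally before anything is uploaded. Here is a minimal sketch, assuming the handlers look like the sample inference.py; StubPipeline and run_locally are hypothetical names I made up for illustration, and the stub only echoes its inputs instead of running a real model:

```python
import json


class StubPipeline:
    """Hypothetical stand-in for the pipeline returned by model_fn."""

    def __call__(self, text, image):
        # Echo the inputs so the data flow is easy to inspect.
        return [{"answer": f"stub answer for {text!r}", "image": image}]


def input_fn(request_body, request_content_type):
    # Same validation as in the sample inference.py.
    if request_content_type != "application/json":
        raise ValueError("This model only supports application/json input")
    data = json.loads(request_body)
    if not data.get("text") or not data.get("image"):
        raise ValueError("Missing text or image from the input")
    return data


def predict_fn(input_data, model):
    return model(input_data["text"], input_data["image"])


def output_fn(prediction, content_type):
    return {"Output": prediction}


def run_locally(raw_body, model, content_type="application/json"):
    """Call the handlers in the order described in the list above."""
    data = input_fn(raw_body, content_type)
    prediction = predict_fn(data, model)
    return output_fn(prediction, content_type)


body = json.dumps({
    "text": "what is in the photo?",
    "image": "https://example.com/cat.png",  # placeholder URL
})
result = run_locally(body, StubPipeline())
print(result)
```

Once this passes, you can swap the stub for the object returned by your real model_fn and run the same call against the actual model files.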

Once the inference.py file is added to your model folder, we need to package the folder into a .tar.gz file:

```shell
tar -czvf model.tar.gz -C ./model .
```

Uploading big model files takes time, so I suggest you check the content of the .tar.gz file before you upload it to S3:

```shell
tar -ztvf model.tar.gz
```

The next step is to upload the file to your S3 bucket:

```python
import boto3

bucket = "SOFTMAXDATABUCKET"
s3_prefix = "MODELFOLDER"
filename = "model.tar.gz"
object_name = f"{s3_prefix}/{filename}"
model_path = f"s3://{bucket}/{object_name}"

s3_client = boto3.client(
    's3',
    aws_access_key_id="YOUR ACCESS KEY",
    aws_secret_access_key='YOUR SECRET ACCESS KEY',
    region_name="us-east-1",
)

# upload_file returns None and raises an exception on failure
s3_client.upload_file(f"./{filename}", bucket, object_name)
```

HERE IS A REALLY IMPORTANT THING TO KEEP IN MIND:

YOU MUST ENSURE THE REGION IS THE SAME AS THE IMAGE_URI CONTAINER FOR THE MODEL LATER. IF NOT, THE HuggingFaceModel CANNOT ACCESS THIS MODEL DATA ON S3. IF YOU ARE UNSURE WHAT I AM TALKING ABOUT, JUST MARK DOWN THE REGION_NAME, AND WE WILL GO INTO A BIT MORE DETAIL LATER.

Now that our model_data is ready, we just need to create a few things:

- model

- endpoint

- endpoint configuration

Before we do all this, we first need to get the image_uri, which is simply the ECR image URI of a pre-built SageMaker Docker image.

As we discussed above, it is very important to pull the image_uri from the same region. There are two ways you can do it:

- you can just copy it from the AWS documentation by selecting the right compatible packages. For example, if you scroll down to the “HuggingFace Inference Containers” section on the page linked, you will see a number of them. Because Serverless Inference does NOT support GPU, we can only choose a CPU version. Here is an example: ‘763104351884.dkr.ecr.us-east-1.amazonaws.com/huggingface-pytorch-inference:2.1.0-transformers4.37.0-cpu-py310-ubuntu22.04’. You can see this container is pulled from us-east-1, the same region we used when uploading the model to S3

- you can call the sagemaker.image_uris.retrieve function to retrieve the specific image URI by providing parameters such as the transformers version, the PyTorch version, etc. Here is a code snippet for it:

```python
import boto3
import sagemaker

transformers_version = '4.37.0'
pytorch_version = '2.1.0'
py_version = 'py310'
region = boto3.session.Session().region_name

image_uri = sagemaker.image_uris.retrieve(
    framework='huggingface',
    base_framework_version=f'pytorch{pytorch_version}',
    region=region,
    version=transformers_version,
    py_version=py_version,
    instance_type='ml.m5.large',  # No GPU support on serverless inference
    image_scope='inference',
)
```

Now, let’s emphasize the region again. You need to make sure the region is the same as where your model_data is located on S3. Otherwise, you will see a very unintuitive error when creating the model.

The interesting thing about the function above is that if you ask for a combination it does not support, the error message tells you the specific package versions it does support.
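One way to catch a region mismatch early is to compare the region embedded in the image URI with the region of your bucket. The sketch below is only an illustration: the helper names are hypothetical, the URI parsing assumes the usual `<account>.dkr.ecr.<region>.amazonaws.com/...` layout, and bucket_region needs AWS credentials, so it is defined but not called here.

```python
def region_from_image_uri(image_uri):
    """Extract the region from an ECR URI such as
    <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>."""
    host = image_uri.split("/", 1)[0]
    parts = host.split(".")
    if len(parts) < 6 or parts[1:3] != ["dkr", "ecr"]:
        raise ValueError(f"Not an ECR image URI: {image_uri}")
    return parts[3]


def bucket_region(bucket_name):
    """Look up a bucket's region with boto3 (requires AWS credentials)."""
    import boto3  # imported lazily so the parsing above works without boto3

    location = boto3.client("s3").get_bucket_location(Bucket=bucket_name)
    # S3 reports us-east-1 as an empty LocationConstraint.
    return location["LocationConstraint"] or "us-east-1"


def check_same_region(image_uri, s3_region):
    """Raise early if the container image and the model data live apart."""
    ecr_region = region_from_image_uri(image_uri)
    if ecr_region != s3_region:
        raise ValueError(
            f"image_uri is in {ecr_region} but model_data is in {s3_region}"
        )
    return ecr_region


example_uri = (
    "763104351884.dkr.ecr.us-east-1.amazonaws.com/"
    "huggingface-pytorch-inference:2.1.0-transformers4.37.0-cpu-py310-ubuntu22.04"
)
print(check_same_region(example_uri, "us-east-1"))
```

In a notebook you would pass bucket_region(bucket) as the second argument instead of a hard-coded string.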

Now that we have the image_uri, we need to retrieve the execution_role, which you should have created via IAM beforehand. Note that the following code was run directly via the SageMaker Notebook instance, so I don’t need to provide a boto3 client.

```python
import sagemaker

# Initialize sagemaker session
sess = sagemaker.Session()
sagemaker_role = sagemaker.get_execution_role(sagemaker_session=sess)
```

Now, we need to create a model on SageMaker via:

```python
from sagemaker.huggingface.model import HuggingFaceModel

huggingface_model = HuggingFaceModel(
    model_data=model_path,  # the .tar.gz on S3, which contains inference.py
    role=sagemaker_role,
    transformers_version=transformers_version,
    pytorch_version=pytorch_version,
    py_version=py_version,
    image_uri=image_uri,
)
```

Please note that model_data in the call above is the S3 location defined in the earlier upload code as “model_path”. It is a .tar.gz file on S3 that looks like: s3://magicbucket/folder/model.tar.gz

This should take about a minute. The result is a new model under SageMaker -> Inference -> Models, which you can inspect in the web dashboard to verify. If the model does not show up yet, check again after the deploy step, since the SDK may defer creating it until then.

The thing to watch out for in this function is that all those versions need to be compatible with the container behind the image_uri. Some earlier versions of transformers threw a lot of errors with the model data I supplied.
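A quick way to spot a version mismatch is to read the versions back out of the image tag and compare them with the ones you pass to HuggingFaceModel. This is a rough sketch that assumes the tag follows the pattern of the example URI above (`<pytorch>-transformers<version>-<cpu|gpu>-<py>...`); the function names are hypothetical, and other containers may use a different tag format.

```python
import re

# Matches tags such as 2.1.0-transformers4.37.0-cpu-py310-ubuntu22.04
TAG_PATTERN = re.compile(
    r"^(?P<pytorch>\d+\.\d+\.\d+)"
    r"-transformers(?P<transformers>\d+\.\d+\.\d+)"
    r"-(?P<device>cpu|gpu)"
    r"-(?P<py>py\d+)"
)


def versions_from_image_uri(image_uri):
    """Return the versions encoded in the tag of the image URI."""
    tag = image_uri.rsplit(":", 1)[-1]
    match = TAG_PATTERN.match(tag)
    if match is None:
        raise ValueError(f"Unrecognized image tag: {tag}")
    return match.groupdict()


def find_mismatches(image_uri, transformers_version, pytorch_version, py_version):
    """Map each mismatching field to a (requested, in_image) pair."""
    found = versions_from_image_uri(image_uri)
    wanted = {
        "transformers": transformers_version,
        "pytorch": pytorch_version,
        "py": py_version,
    }
    return {
        key: (wanted[key], found[key])
        for key in wanted
        if wanted[key] != found[key]
    }


example_uri = (
    "763104351884.dkr.ecr.us-east-1.amazonaws.com/"
    "huggingface-pytorch-inference:2.1.0-transformers4.37.0-cpu-py310-ubuntu22.04"
)
print(find_mismatches(example_uri, "4.37.0", "2.1.0", "py310"))
print(find_mismatches(example_uri, "4.26.0", "2.1.0", "py310"))
```

An empty dict means the three versions agree with the container; anything else points at the parameter to change.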

Now that we have the model created, let’s deploy it. To do that, we need to:

- create an endpoint configuration

- deploy the model per the configuration

Let’s view the code:

```python
from sagemaker.serverless import ServerlessInferenceConfig

serverless_config = ServerlessInferenceConfig(
    memory_size_in_mb=6144,
    max_concurrency=2,
)

# deploy the serverless endpoint
predictor = huggingface_model.deploy(
    serverless_inference_config=serverless_config
)
```

In this example, I specified serverless inference via ServerlessInferenceConfig. I also told the config to allow a maximum concurrency of 2, which caps how many requests the endpoint handles at once, and a memory limit of 6144 MB. You can see your options for these parameters here. Keep in mind that if you have a large model, the serverless setup is probably not a good fit due to its memory limit and lack of GPU support. Consider using a SPOT instance if you need low latency and high persistence while trying to save money.

This will take about 5 minutes or so to create. Once done, you will see under your SageMaker -> Inference dashboard that you have a new Endpoint and a new Endpoint Configuration. You can delete and update these later. We can discuss how to do that in a separate post.
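Since a deployment takes several minutes, it can be worth checking the serverless settings before calling deploy. The helper below is a hypothetical sketch, not part of the SageMaker SDK. The allowed memory sizes (1 GB steps from 1024 to 6144 MB) and the concurrency ceiling of 200 are my assumptions based on the limits documented at the time of writing, so please verify them against the current AWS documentation.

```python
# Assumed limits; check the current AWS documentation before relying on them.
ALLOWED_MEMORY_MB = (1024, 2048, 3072, 4096, 5120, 6144)
MAX_CONCURRENCY_LIMIT = 200


def validate_serverless_settings(memory_size_in_mb, max_concurrency):
    """Return a list of human-readable problems; empty means the values look fine."""
    problems = []
    if memory_size_in_mb not in ALLOWED_MEMORY_MB:
        problems.append(
            f"memory_size_in_mb={memory_size_in_mb} is not one of {ALLOWED_MEMORY_MB}"
        )
    if not 1 <= max_concurrency <= MAX_CONCURRENCY_LIMIT:
        problems.append(
            f"max_concurrency={max_concurrency} is outside 1..{MAX_CONCURRENCY_LIMIT}"
        )
    return problems


print(validate_serverless_settings(6144, 2))
print(validate_serverless_settings(8192, 0))
```

The values used in this post (6144 MB, concurrency 2) pass the check, while something like 8192 MB would be flagged before you wait on a failing deployment.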

Now let’s make an inference.

Remember, earlier, in our inference.py file, we specified:

```python
def predict_fn(input_data, model):
    return model(input_data['text'], input_data['image'])
```

So we need to provide a JSON object with two attributes that looks like this:

```python
data = {
    'text': 'whose faces are in the photo?',
    'image': 'https://cdn.softmaxdata.com/ovcslEr8VSS0sreIuvaL/31_20.png'
}
```

Then you can invoke the endpoint via:

```python
predictor.predict(data)
```

The line above was run in the same notebook in which we created the endpoint. You most likely will not do that when you call it via Lambda.

Your code will look like this. You can find more details here.

```python
import json

import boto3

runtime = boto3.client("sagemaker-runtime")

endpoint_name = "<your-endpoint-name>"
content_type = "application/json"  # our inference.py only accepts JSON

# the request body must be sent as bytes
payload = json.dumps({
    "text": "whose faces are in the photo?",
    "image": "https://cdn.softmaxdata.com/ovcslEr8VSS0sreIuvaL/31_20.png",
}).encode("utf-8")

response = runtime.invoke_endpoint(
    EndpointName=endpoint_name,
    ContentType=content_type,
    Body=payload,
)

# the response body is a stream that has to be read
result = response["Body"].read().decode("utf-8")
```

Here we go, I hope this is simple and useful enough for you. I omitted a lot of details and code to simplify as much as I can. If you have any questions, please do not hesitate to leave a comment. I will try to respond. If you need help with interesting projects related to Document Processing, Voice Analysis and Language Generation, please reach out to success@softmaxdata.com